New AWS re:Invent Announcements: Monday Night Live with Peter DeSantis

Another year, another re:Invent Monday Night Live. It comes as no surprise that the re:Invent week kicks off with this session, as it highlights some of the most remarkable engineering achievements that push the limits of cloud computing. While we’re all familiar with the AWS console and the services that AWS provides, this session goes deeper into the underlying components that underpin these services, focusing on the actual silicon, storage, compute, and networking layers.

The session was led by Peter DeSantis, the Senior Vice President of AWS Utility Computing. Let’s see what new announcements Peter had in store for us this year!

The keynote primarily centered around the strategy to bring the benefits of serverless computing to the existing “serverful” software in use today. Peter began by discussing all the benefits of serverless computing and how AWS continues to create services that remove the undifferentiated heavy lifting of provisioning, managing, and maintaining servers. It focused on enhancements in database, compute, caching, and data warehouse capabilities.

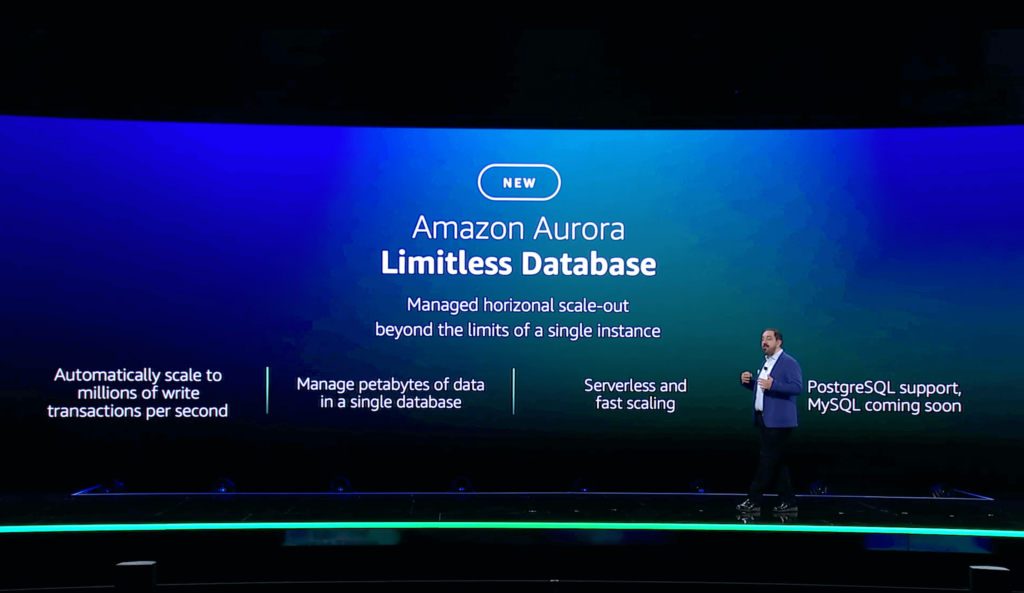

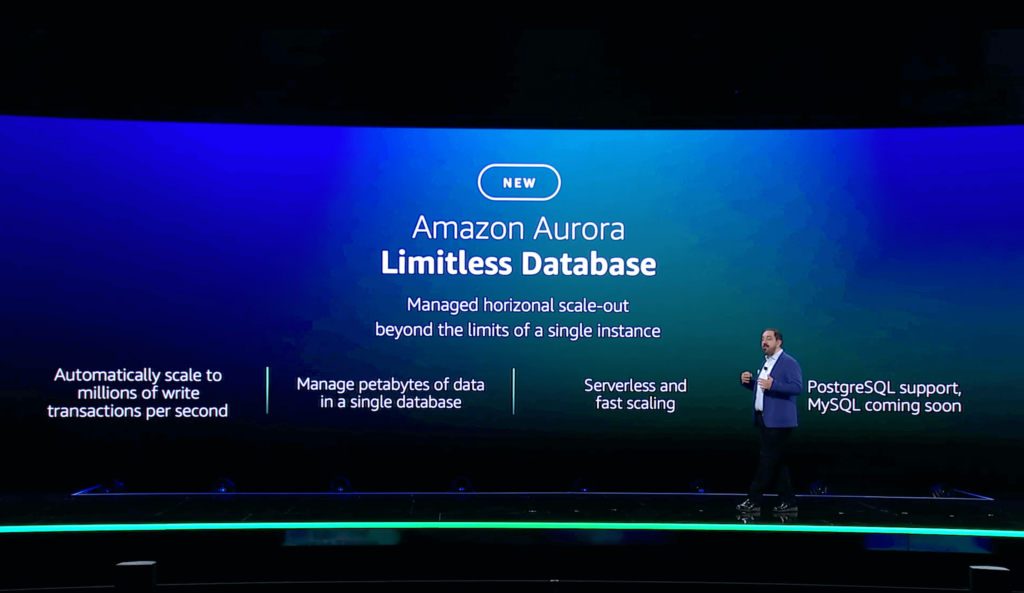

The first announcement is in the category of database improvements. Currently, tons of customers use Amazon Aurora Serverless for its ability to adjust capacity up and down to support hundreds of thousands of transactions.

However, for some customers, this scale is simply not enough. Some customers need to process and manage hundreds of millions of transactions and have to shard their database in order to do so. However, a sharded solution is complex to maintain and requires a lot of time and resources.

This problem got AWS thinking: What would sharding look like in an AWS-managed, serverless world?

Aurora Limitless Database solves this issue, by enabling users to scale the write capacity of their Aurora database beyond the write capacity of a single server. It does this partly through sharding, enabling users to create a Database Shard Group containing shards that each store a subset of the data stored in a database. They’ve also taken into account transactions, and provide transactional consistency across all shards in the database.

The best part is that it removes the burden of managing a sharded solution from the customer, while providing the benefits of parallelization. .

To create Aurora Limitless Database, AWS needed to create a few underlying technologies:

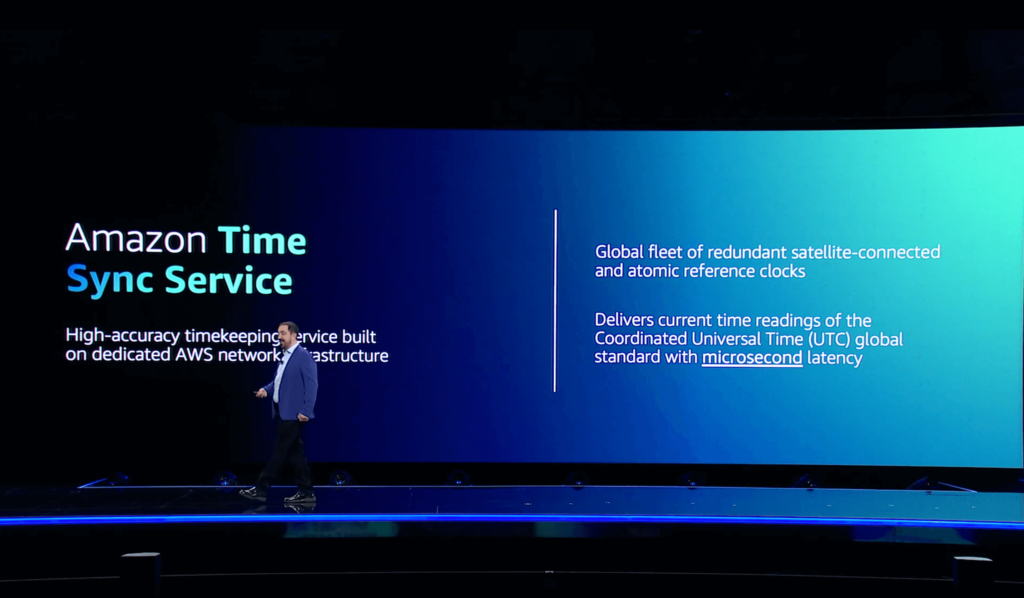

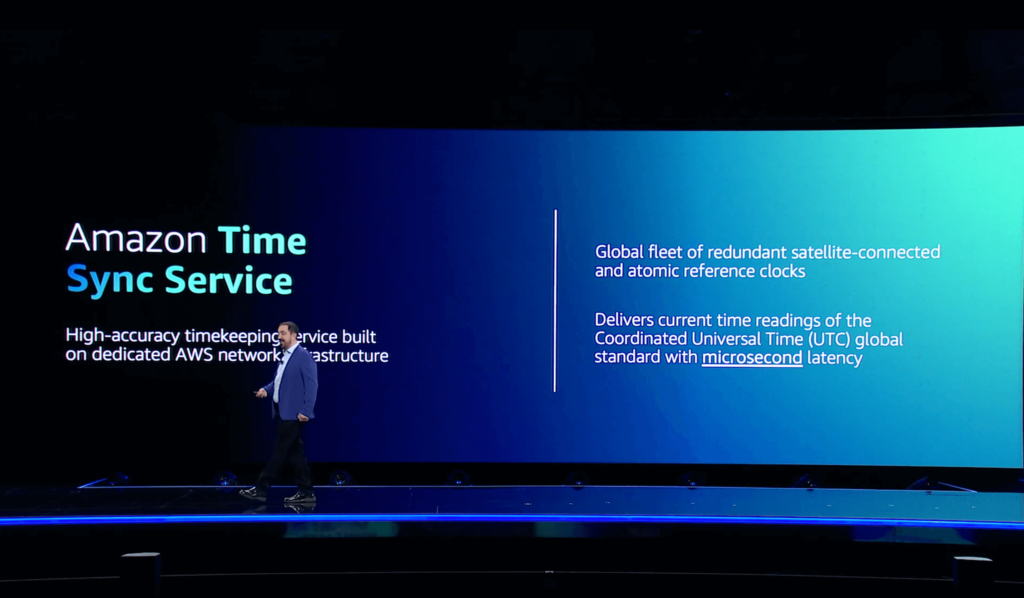

If you’re feeling deja-vu, it’s true that Amazon has already released the Amazon Time Sync Service years ago. It’s a high-accuracy timekeeping service powered by redundant satellite-connected and atomic reference clocks in AWS regions. The old version of the service could deliver current time readings of the Coordinated Universal Time (UTC) global standard with 1 millisecond latency.

The new version, announced today, is an improvement to the already existing service. Now, the service will deliver current time readings of the UTC global standard with microsecond latency. You can take advantage of Amazon Time Sync Service through supported EC2 Instances, enabling you to better increase distributed application transaction speed, more easily order application events, and more!

If you already use the service on supported instances, you will see clock accuracy improve automatically.

Next on the list is a revolution in the caching category. Historically, caches are not very serverless, as the performance of the cache relies on the memory of the server that hosts it. Additionally, there’s a ton of resource planning that goes into caching data. If your cache is too small, you evict useful data. If your cache is too large, you waste money on memory you don’t need. With a serverless cache, both the infrastructure management and resource planning goes away.

This is the problem that Amazon ElastiCache Serverless hopes to solve. It’s a serverless option of ElastiCache that enables you to launch a Redis or Memcached caching solution without having to provision, manage, scale, or monitor a fleet of nodes.

It’s compatible with Redis 7 and Memcached 1.6, it has a median lookup latency of half a millisecond, and it supports up to 5 TB of memory capacity.

Under the hood of Amazon ElastiCache Serverless is a sharded caching solution. This means that the technologies supporting the service are very similar to the technologies that underpin the Aurora Limitless Database. For example, Amazon ElastiCache Serverless uses:

Next up is data warehouses. The existing Amazon Redshift Serverless service makes it easy to provision and scale data warehouse capacity based on query volume. If all queries are similar, this scaling mechanism works really well. However, in cases where you don’t have uniform queries, there may be times when a large complex query slows down the system and impacts other smaller queries.

To solve this problem, Amazon created a new Redshift serverless capability to provide AI-driven scaling and optimizations. The idea is to avoid bogging down a Redshift cluster by predicting and updating Redshift capacity based on anticipated query load. It anticipates this load by analyzing each query, taking into account query structure, data size, and other metrics.

It then uses this query information and determines if the query has been seen before or if it’s a new query. All of this helps Redshift determine the best way to run the query, with efficiency, impact on cluster, and price in mind.

A few years ago, AWS announced the AWS Center for Quantum Computing at the Caltech campus in California. Its primary task is to overcome technical challenges in the quantum computing space… and they certainly have their work cut out for them.

Currently, the quantum computing space has a lot of technical challenges. One of which is that today’s quantum computers are noisy and prone to error.

Unlike general purpose computers that have a binary bit that we compose into more complex structures to build computer systems, quantum computers use an underlying component called a qubit that stores more than just a 0 and 1.

With general purpose computers, a binary bit can lead to bit flip errors. However, these are easily protected against. In the quantum world, you not only have to protect against bit flips, but you also have to protect against phase flips.

The AWS Center for Quantum Computing is focused on implementing quantum error correction more efficiently, so that these errors happen less frequently. To give you an idea of where we’re at today: State of the art quantum computers offer 1 error in every 1000 quantum operations.

In years to come, the AWS team at the Center for Quantum Computing hopes to have just 1 error in every 100 billion quantum operations.

That brings us to the end of the keynote. I always love when AWS Keynotes are centered mainly around improvements to existing services – and that’s exactly what Peter DeSantis offered us today. The best part is: it’s only Monday. There’s so much more to come for AWS re:Invent 2023. Stay tuned!